In the past decade, technology breakthroughs have revolutionized the lives of the hearing impaired. Discover advancements like live captions, digital hearing aids, cochlear implants, and smart home devices that are transforming accessibility. Learn how AI and machine learning enhance communication and quality of life for those with hearing impairments. Explore the impact of these innovations and how they are changing lives.


The Best External Wireless Microphones for Your Event Livestream with Live Caption AI


The Best External Wired Microphones for Your Event Livestream with Live Caption AI

How AI Voiceover Generators are Revolutionizing Content Creation

In the ever-evolving landscape of content creation, staying ahead of the curve means embracing the latest technologies. One such technology making significant waves is the AI voiceover generator.

These tools are transforming how creators produce and deliver content, offering a range of benefits from efficiency and cost-effectiveness to enhanced accessibility and personalization. This blog explores how AI voiceover generators are revolutionizing content creation across various industries.

Speed and Efficiency

One of the most significant advantages of AI voiceover generators is the speed at which they can produce high-quality voiceovers. Traditional voiceover production involves hiring voice actors, booking studio time, and conducting multiple recording sessions to achieve the desired result. This process can be time-consuming and costly.

AI voiceover generators, on the other hand, can produce professional-grade voiceovers in a matter of minutes. This rapid turnaround time allows content creators to meet tight deadlines and stay agile in a fast-paced digital environment.

An example workflow could be:

Transcribe your thoughts with Live Caption AI by speaking the core points you want your script to cover.

Copy and paste your text into an AI tool like Jasper.ai, and have it create a more professional-sounding script for your voiceover to read.

Use Invideo to generate the voiceover for your video.

Cost-Effectiveness

Hiring professional voice actors and securing studio time can be expensive, especially for smaller content creators or startups with limited budgets. AI voiceover generators offer a cost-effective alternative, providing high-quality voiceovers at a fraction of the cost.

This democratizes access to professional voiceover services, enabling more creators to enhance their content with engaging audio without breaking the bank.

Consistency and Quality

Maintaining consistency in voiceovers can be challenging, especially for long-term projects or series where the same voice needs to be replicated accurately over time. AI voiceover generators ensure uniformity in tone, pitch, and delivery, providing a consistent auditory experience for the audience.

These tools use algorithms that mimic human speech patterns, producing natural-sounding voiceovers that can be difficult to distinguish from recordings made by human actors.

Accessibility and Inclusivity

AI voiceover generators are also making content more accessible to diverse audiences. For example, generating voiceovers in multiple languages is significantly easier with AI. This capability allows creators to reach a global audience by offering content in various languages, breaking down language barriers, and expanding their reach.

AI voiceovers can be used to create audio descriptions for visually impaired audiences, enhancing inclusivity and ensuring that content is accessible to everyone.

Moreover, applications such as Live Caption AI offer real-time audio transcription to enhance caption quality for your YouTube content or text-based articles.

Personalization and Customization

Personalization is a key trend in content creation, and AI voiceover generators excel in this area. These tools can generate voiceovers tailored to specific audience segments, enhancing the personalization of content.

For instance, marketing videos can feature voiceovers that address individual customers by name or tailor messages based on their preferences and behaviors. This level of customization helps create a more engaging and personalized experience for the audience.

Enhancing E-Learning and Online Courses

The e-learning industry has seen a significant boost from AI voiceover generators. Creating engaging and professional voiceovers for online courses can enhance the learning experience and improve information retention.

AI tools can quickly generate voiceovers for educational content, allowing educators and instructional designers to focus on creating high-quality, informative material without worrying about the complexities of voiceover production. This technology also facilitates the creation of multilingual courses, making education accessible to a broader audience.

Boosting Social Media and Marketing Content

In the realm of social media and digital marketing, standing out is crucial. AI voiceover generators can help create compelling and attention-grabbing content that captures the audience's interest.

From promotional videos and product demos to engaging social media posts, AI voiceovers add a professional touch that can enhance the overall quality of the content. The ability to produce high-quality voiceovers quickly also means that marketers can respond to trends and events in real time, keeping their content relevant and timely.

Supporting Independent Filmmakers and Content Creators

Independent filmmakers and small content creators often face budget constraints that limit their ability to hire professional voice actors. AI voiceover generators provide an affordable solution, enabling these creators to produce high-quality voiceovers for their projects.

This technology opens up new possibilities for indie films, web series, and other creative projects, allowing them to compete with larger productions in terms of audio quality and engagement.

Overcoming Challenges and Ethical Considerations

While AI voiceover generators offer numerous benefits, they also present certain challenges and ethical considerations. Ensuring the authenticity and emotional expression of AI-generated voiceovers can be challenging, as the nuances of human emotion are difficult to replicate. Additionally, the use of AI voiceovers raises questions about job displacement for voice actors and the ethical implications of using AI-generated voices.

To address these concerns, it's important for creators to use AI voiceover generators responsibly and transparently. Balancing the use of AI with human talent can help maintain the quality and authenticity of voiceovers while leveraging the efficiencies offered by AI technology.

Future Trends and Developments

The future of AI voiceover generators looks promising, with ongoing advancements in AI and machine learning set to further enhance their capabilities. We can expect even more natural-sounding voiceovers, improved emotional expression, and greater customization options. As AI solutions continue to evolve, they will likely become an integral part of the content creation process, offering new opportunities for innovation and creativity.

Conclusion

AI voiceover generators are revolutionizing content creation by offering speed, cost-effectiveness, consistency, and enhanced accessibility. These tools are transforming how creators produce and deliver content, enabling them to reach broader audiences and create more engaging and personalized experiences.

While there are challenges and ethical considerations to address, the benefits of AI voiceover generators are undeniable. As technology continues to advance, AI voiceovers will play an increasingly central role in the future of content creation, opening up new possibilities for creators across various industries.

Boost Engagement: Why Every Creator Needs a Video Caption Generator

Discover how video caption generators can boost engagement, enhance accessibility, and expand your audience. Transform your content creation and sharing today!

Live Caption, Inc. Unveils Revolutionary Upgraded App to Transform Communication for the Hearing Impaired

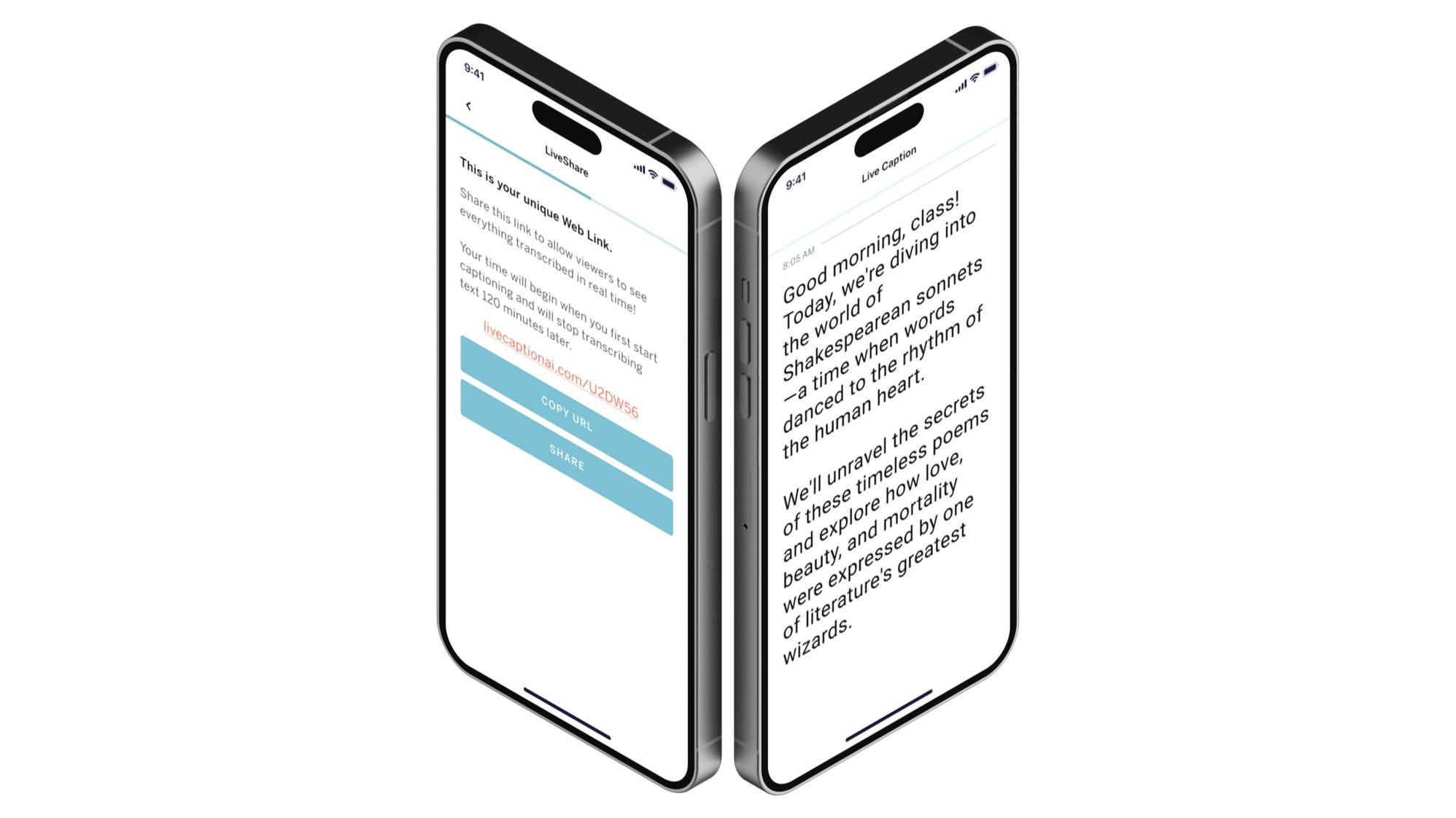

Brooklyn, NY, March 22, 2024: Live Caption, Inc., a pioneering company specializing in speech-to-text technology, is thrilled to announce the launch of its new, highly anticipated app, Live Caption AI. This upgrade represents a significant leap forward in helping individuals with hearing loss connect in real-time conversations, furthering the company's mission to eliminate communication barriers.

In a remarkable evolution from its original app launched in 2011, the new Live Caption AI app brings to the table advanced AI language models that enhance the accuracy and speed of real-time captions for users and allow for the streaming of real-time captions to other devices. This groundbreaking technology allows users to broadcast their words to an audience efficiently for seamless communication in personal conversations, live events, educational settings, or religious gatherings.

"Since our inception, our goal has been to use technology to facilitate communication for those with hearing impairments and break down communication barriers worldwide," said Ryan Flynn, founder of Live Caption, Inc. "With this newly upgraded app, we are not just advancing technology; we are revolutionizing how people interact, making every conversation accessible and inclusive."

The Live Caption AI app is the first to leverage sophisticated cloud-based processing, ensuring users receive instant captions without lag, a critical feature in live communication scenarios. Furthermore, the app's innovative "LiveShare" feature allows users to stream live-captioned conversations with others, amplifying its impact in various settings.

"We're not just stopping here," Ryan Flynn continued. "Our vision is to explore and unlock endless possibilities that our technology can offer. From educational seminars to religious sermons, our app can bridge communication gaps in numerous domains."

The Live Caption AI app is currently available for download on Google Play and the Apple App Store, free of charge, as the company remains steadfast in its commitment to making communication accessible to all. Live Caption AI invites you to experience the future of conversation, where every word is captured, and no voice is left unheard.

For additional information about Live Caption AI and to download the app, please visit www.LiveCaptionAI.com.

About Live Caption, Inc.

Founded in 2016, Live Caption, Inc. has relentlessly pursued innovations in speech-to-text technology to assist individuals with hearing loss. The company's latest offering, the upgraded Live Caption AI app, sets a new standard for real-time transcription, enabling clearer and more productive conversations across the globe.

Contact Information

Live Caption, Inc.

Brooklyn, NY

Media Contact: Ryan Flynn

Phone: +1-347-455-0668

Email: info@livecaptionapp.com

Website: www.LiveCaptionAI.com

Grandparents in the Digital Age: How Tech Is Bringing Families Together

Author: Evelyn James

evelyn.james@harbourmail.co.uk

Google Slides gains automated real-time subtitles when presenting

In theory, slides should convey only the key points of a presentation, not the presenter’s words verbatim. However, having a word-for-word transcript can be useful, especially for those who are hard of hearing. Google Slides is now adding real-time closed captions to help with accessibility.

When giving a presentation in Google Slides, a new “Captions” option in the toolbar can be selected. It enables the microphone on the presenting computer to transcribe in real-time what you’re saying alongside each slide.

The captions appear at the bottom of the screen and are generated with machine learning. Google notes that accent, voice modulation, and intonation all affect the quality of the transcription, and the company is working to improve caption quality over time.

In Google's announcement, the team behind the feature wrote: “Recently, an internal hackathon led us to work on a project that is deeply personal. Upon observing that presentations can be challenging for individuals who are deaf or hard of hearing to follow along, we both teamed up with the idea to add automated closed captions to G Suite’s presentation tool, Google Slides.”

This Google Slides feature was born out of a hackathon at Google, but it has other use cases, including presenting in noisy environments, with poor audio equipment, or to non-native speakers of the presentation language.

Closed captioning in Slides can help audience members like Laura who are deaf or hard of hearing, but it can also be useful for audience members without hearing loss who are listening in noisy auditoriums or rooms with poor sound settings. Closed captioning can also be a benefit when the presenter is speaking a non-native language or is not projecting their voice.

At the moment, this accessibility feature is optimized for one user presenting at a time, while only U.S. English is currently supported. Quality might vary if there are multiple presenters using different computers.

Meanwhile, it works both for local presentations and for those given over video-conferencing software, with captions appearing on the shared screen. The feature requires desktop Chrome on Mac, Windows, Linux, or Chrome OS. Captions in Google Slides will be available in more languages over time.

Microsoft adds live captions to Skype and PowerPoint

The programs also support live subtitles in multiple languages.

YouTube’s AI can now describe sound effects

http://www.theverge.com/2017/3/24/15053328/youtube-ai-caption-sound-effects


by Micah Singleton (@MicahSingleton), Mar 24, 2017, 3:17pm EDT

YouTube has had automated captions for its videos since 2009, and now it’s expanding the feature to include captions for sound effects. The video service uses machine learning to detect sound effects in videos and add the captions [APPLAUSE], [MUSIC], and [LAUGHTER] to millions of videos.

While those three were some of the most frequent manually captioned sounds, YouTube says it’s only in the early stages of making improvements for its deaf and hard of hearing users. The company says captions like ringing, barking, and knocking are next in line, but those require more deciphering than simple laughter or music.

The improved captions are now available on YouTube.

IBM edges closer to human speech recognition

http://www.businessinsider.com/ibm-edges-closer-to-human-speech-recognition-2017-3


IBM has taken the lead in the race to create speech recognition that's on par with the error rate of human speech recognition.

As of last week, IBM’s speech-recognition team achieved a 5.5% error rate, a vast improvement from its previous record of 6.9%.

Digital voice assistants like Apple's Siri and Microsoft's Cortana must meet or outdo human speech recognition — which is estimated to have an error rate of 5.1%, according to IBM — in order to see wider consumer adoption. Voice assistants are expected to be the next major computing interface for smartphones, wearables, connected cars, and home hubs.

While digital voice assistants are far from perfect, competition among tech companies is bolstering overall voice-recognition capabilities, as tech companies vie to outdo one another. IBM is locked in a race with Microsoft, which last year developed a voice-recognition system with an error rate of 5.9%, according to Microsoft's Chief Speech Scientist Xuedong Huang; this beat IBM by an entire percentage point.

Despite progress, however, existing methods to study voice recognition lack an industry standard, which makes it difficult to truly gauge advances in the technology. IBM tested a combination of “Long Short-Term Memory” (LSTM), a type of artificial neural network, and Google-owned DeepMind’s WaveNet language models against SWITCHBOARD, a series of recorded human discussions. And while SWITCHBOARD has been regarded as a benchmark for speech recognition for more than two decades, IBM notes that other benchmarks, such as CallHome, are regarded as more difficult for machines to transcribe. Using CallHome, the company achieved a 10.3% error rate.
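The percentages in this story are word error rates (WER): the minimum number of word substitutions, deletions, and insertions needed to turn a system's transcript into the human reference transcript, divided by the number of words in the reference. The sketch below computes WER with a standard word-level edit distance. It is an illustration under simple assumptions, not IBM's scoring setup; benchmark scoring usually normalizes text (case, punctuation, spelling variants) before comparing, which this sketch skips, and the function name is hypothetical.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by reference length (illustrative sketch)."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between ref[:i-1] and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # substitution, or a match when cost is 0
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


if __name__ == "__main__":
    # One substitution (sat -> sit) and one deletion (the): 2 errors / 6 words.
    print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```

Because insertions count as errors, WER can exceed 100%: `word_error_rate("hi", "hi there friend")` returns `2.0`.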

Moreover, voice assistants need to overcome several hurdles before mass adoption occurs:

- They need to surpass “as close as humanly possible.” Despite recent advancements, speech recognition needs to reach roughly 95% accuracy for voice to be considered the most efficient form of computing input, according to Kleiner Perkins analyst Mary Meeker. That’s because expectations for automated services are much less forgiving than they are for human error. In fact, when a panel of US smartphone owners was asked what they most wanted voice assistants to do better, “understand the words I am saying” received 44% of votes, according to MindMeld.

- Consumer behavior needs to change. For voice to truly replace text or touch as the primary interface, consumers need to be more willing to use the technology in all situations. Yet relatively few consumers regularly employ voice assistants; just 33% of consumers aged 14-17 regularly used voice assistants in 2016, according to an Accenture Report.

- Voice assistants need to be more helpful. Opening third-party apps to voice assistants will be key in providing consumers with a use case more in line with future expectations of a truly helpful assistant. Voice assistants like Siri, Google Assistant, and Amazon's Alexa are only just beginning to gain access to these apps, enabling users to carry out more actions, like ordering a car.

New app gives captions to Google+ Hangouts

05/22/2013

Hangout Captions is an app that connects live transcription services directly into a Google+ Hangout, improving accessibility for participants who are deaf or hard of hearing.

Have you tried it yet? Please leave a review with your experience!

https://hangout-captions.appspot.com/

The Google Hangout that will change the way I will view communication forever

07/11/2012

Last week, I had the great honor to speak with three very awesome people in a Google+ Hangout: Christian Vogler, director of the Technology Access Program at Gallaudet, Andrew Phillips, policy counsel at the NAD, and Willie King, the director of product management at ZVRS.

These three folks have one thing in common – they are deaf.

I can’t understand sign language, and I speak way too fast for anyone to be able to read my lips. How did it go without a hitch? Thanks to a Google+ Hangout app announced by the Google Accessibility team last week, a sign language expert and a fantastic CART transcriptionist, Laura Brewer. All in real-time, all virtually.

Full story...

http://thenextweb.com/insider/2012/07/11/the-google-hangout-that-changed-the-way-i-will-view-communication-forever/

Launching a Business in 54 Hours

December 23, 2011 | 4:04 pm by Anne Fisher

“I was skeptical at first about whether you can really accomplish much in just one weekend,” said Ryan Flynn, a graphic designer who worked on voice-recognition technologies at Motorola before moving to New York from Chicago in 2010. Mr. Flynn is the founder of a fledgling enterprise called Closed Capp, whose first product is a mobile app for real-time closed captioning, aimed at giving the hearing-impaired access to cellphone conversations.

Mr. Flynn’s initial doubts soon dissolved: Startup Weekend introduced him to two fellow techies who “helped me figure out a workable business model and refine the technology,” he said. “It was great to have two solid days to focus on solutions to things I’d been stuck on. We had a working prototype by the end of the weekend.” He also had several promising conversations with interested investors, he added....

Read more: http://mycrains.crainsnewyork.com/blogs/executive-inbox/2011/12/launching-a-business-in-54-hours/#.TvTC0YexVzQ.email

Voice Recognition on Android's Ice Cream Sandwich is Awesome

12/22/2011

From Boston.com/business

...It’s a pretty substantial upgrade, as significant as Apple Inc.’s recent rollout of its improved iPhone software. Up to now, Google has delivered separate versions of Android for phones and tablet computers. Ice Cream Sandwich combines valuable features from both versions and adds a lot of welcome improvements.

One of the biggest is in speech recognition. No, Android phones still haven’t caught up with Apple’s voice-controlled personal assistant Siri. But Ice Cream Sandwich makes it far easier to dictate e-mails and text messages. With earlier versions, you basically spoke one phrase or sentence, then waited for the software to do its stuff. Now, the process is continuous. Just keep talking, and remember to verbally add punctuation marks, like “comma” and “period.” The software transcribes sentence after sentence with surprising speed and impressive accuracy. It’s so good that you might start dictating all your text communications....

This upgrade works with Closed Capp and is awesome once you get used to speaking your punctuation. So exciting!

http://www.boston.com/business/technology/articles/2011/12/22/tasty_ice_cream_sandwich_from_google/
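Speaking punctuation works because the recognizer treats certain words as commands and swaps them for symbols. The sketch below is a hypothetical, simplified post-processing step that shows the idea on a finished transcript; it is not how Android or Closed Capp actually implement dictation, and the command list and function name are assumptions for illustration.

```python
import re

# Hypothetical command list; real dictation engines support more commands and locales.
SPOKEN_PUNCTUATION = {
    "comma": ",",
    "period": ".",
    "question mark": "?",
    "exclamation point": "!",
}


def apply_spoken_punctuation(transcript: str) -> str:
    """Replace spoken punctuation words with symbols, then fix sentence capitals."""
    # Try longer phrases first so multi-word commands are matched whole.
    for phrase in sorted(SPOKEN_PUNCTUATION, key=len, reverse=True):
        symbol = SPOKEN_PUNCTUATION[phrase]
        # Drop the whitespace before the command word so the symbol attaches
        # to the previous word; \b keeps "period" from matching "periodic".
        pattern = r"\s*\b" + re.escape(phrase) + r"\b"
        transcript = re.sub(pattern, symbol, transcript, flags=re.IGNORECASE)
    # Capitalize the first letter after sentence-ending punctuation.
    transcript = re.sub(
        r"([.?!]\s+)([a-z])",
        lambda m: m.group(1) + m.group(2).upper(),
        transcript,
    )
    # Capitalize the very first letter of the transcript.
    return transcript[:1].upper() + transcript[1:]


if __name__ == "__main__":
    print(apply_spoken_punctuation(
        "hello comma how are you question mark i am fine period"
    ))
```

Running it prints `Hello, how are you? I am fine.`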

Closed Capp update - Now with Keyboard Entry

12/08/2011

We updated the Android App yesterday to include a keyboard entry mode as well. A new on-screen button switches you back and forth between modes so the conversation can continue smoothly. Text that you type appears large on screen for others to read.

If you have used Closed Capp in your daily life, we would love to hear about your experience. Please send your stories to ClosedCAPPtioned@gmail.com or find us on Twitter (@ClosedCapp) and Facebook.

Closed Capp wins the Twilio Sponsors' Prize for Startup Weekend NYC!

11/23/2011

Closed Capp was chosen to win the Twilio Sponsors' Prize for Startup Weekend NYC, November 20, 2011. Thanks to Mason Du and Seth Hosko for helping push this idea forward, and thank you to http://www.twilio.com for the award!

It was a great weekend and we were able to make this app much more functional, letting the speaker keep a more natural pace during a conversation. We hope you find this app useful & we are working to make it even better in the future!

Can you hear me now? 1 in 5 in U.S. suffers hearing loss

By Linda Carroll for MSNBC, November 14th, 2011

Nearly one in five Americans has significant hearing loss, far more than previously estimated, a first-ever national analysis finds.

That means more than 48 million people across the United States have impairments so severe that it’s impossible for them to make out what a companion is saying over the din of a crowded restaurant, said Dr. Frank Lin, author of a new study published in the latest issue of the Archives of Internal Medicine.

“It’s pretty jaw-dropping how big it is,” said Lin, an assistant professor of otolaryngology and epidemiology at the Johns Hopkins School of Medicine.

Previous estimates had pegged the number affected by hearing loss at between 21 million and 29 million.

Lin and other researchers were surprised at the magnitude of the problem, but the significance of the findings goes beyond the “wow” factor, he said.

That’s because other studies have shown that hearing decline is often accompanied by losses in cognition and memory. Further, Lin said, some studies have associated hearing loss with a greater risk of dementia.

Lin’s study is the first to look at hearing loss in a national sample of Americans aged 12 and older who have actually had their hearing tested. Earlier studies were smaller or depended on people’s self-reports of hearing loss.

Full Story...

http://vitals.msnbc.msn.com/_news/2011/11/13/8785941-can-you-hear-me-now-1-in-5-in-us-suffers-hearing-loss

Closed Capp is a part of New York Startup Weekend!

11/19/2011

We are actively working on upgrading this service to make a smoother experience and more useful app. Please leave your info if you would like to hear about our progress.

Microsoft to unveil a ‘breakthrough’ in speech recognition

29 August '11, 08:56pm

Earlier today, Microsoft Research released a blog post promising that at Interspeech 2011, an event that is underway, the company would unveil a ‘breakthrough’ in speech recognition.

Importantly, the development does not deal with speech recognition that requires the user to ‘train’ the system, but instead involves “real-time, speaker-independent, automatic speech recognition.” In other words, true recognition of human speech.

Microsoft claims that it has managed to “dramatically improve the potential” of this sort of technology becoming commercially functional. Through the use of deep neural networks, the company has managed to improve the accuracy of ‘on the go’ speech recognition, something that is a near holy grail of technology. How the team executed the breakthrough is exceptionally technical, and we will not summarize it here because it requires extensive background knowledge to follow. Microsoft’s blog post has all the information, if you’re curious.

As for the results of what Microsoft Research has built, this is the crucial revelation: “The subsequent benchmarks achieved an astonishing word-error rate of 18.5 percent, a 33-percent relative improvement compared with results obtained by a state-of-the-art conventional system.” The company claims that this has “brought fluent speech-to-speech applications much closer to reality.”
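A “33-percent relative improvement” means the new system makes about a third fewer errors than the conventional one, not that the error rate dropped by 33 percentage points. The quote does not state the baseline, so the sketch below back-computes it from the two published numbers; because 33% is itself rounded, the implied baseline of roughly 27.6% is only an estimate. The helper names are hypothetical, and the same formula applies to IBM's later drop from 6.9% to 5.5%.

```python
def relative_improvement(baseline_wer: float, new_wer: float) -> float:
    """Fraction by which new_wer reduces baseline_wer (0.25 means 25% fewer errors)."""
    if baseline_wer <= 0:
        raise ValueError("baseline_wer must be positive")
    return (baseline_wer - new_wer) / baseline_wer


def implied_baseline(new_wer: float, rel_improvement: float) -> float:
    """Baseline WER implied by a reported WER and relative improvement."""
    if not 0 <= rel_improvement < 1:
        raise ValueError("rel_improvement must be in [0, 1)")
    return new_wer / (1 - rel_improvement)


if __name__ == "__main__":
    # Microsoft, 2011: 18.5% WER reported as a 33% relative improvement.
    print(f"Implied baseline: {implied_baseline(18.5, 0.33):.1f}%")   # 27.6%
    # IBM, 2017: 6.9% -> 5.5% on SWITCHBOARD.
    print(f"IBM relative gain: {relative_improvement(6.9, 5.5):.1%}")  # 20.3%
```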

That said, this remains very much a research project. The company made that abundantly clear in the discussion of its progress.

This project is not simply an interesting technical problem, but something that Microsoft desperately needs solved. The company is forging ahead with what it calls Natural User Interface integration (think the Kinect, voice to text, and so forth), and so it needs a better voice solution. The company must have its eyes on its Research division, pushing them towards a commercially viable product that can be integrated across the world of its products.

For now, this is one step, albeit an important one.